Latest Articles


AI Consciousness "Awakening"! Turing Award Winner Bengio's Bold Statement: AI is Approaching a Critical Point

In a thought-provoking article in the journal Science, Turing Award winner Yoshua Bengio and Eric Elmoznino explore whether artificial intelligence could achieve consciousness, a concept once relegated to science fiction. Their work delves into the philosophical and scientific arguments surrounding computational...

other

Mastering LLM Agents: Anthropic's Official Guide to Tool Development

As the era of AI agents dawns, the quality and efficiency of their tools become paramount. Anthropic has released a comprehensive official guide detailing best practices for developing, evaluating, and optimizing high-performance tools for LLM agents.

technology

Billionaire Shakes Up Tech Elite: Oracle's Ellison Reclaims World's Richest Title, Fueled by AI Boom

Silicon Valley icon Larry Ellison reclaims the title of the world's richest person, with his net worth soaring to $393 billion and eclipsing that of Elon Musk. Fueling this monumental rise are Oracle's explosive growth in cloud computing and a groundbreaking $300 billion AI infrastructure deal with OpenAI.

other

Thinking Machines Lab Unveils the Truth Behind LLM Inference Nondeterminism

Thinking Machines Lab has published its first deep-dive blog post, arguing that the real cause of LLM inference nondeterminism is not simply floating-point math combined with concurrency, but the lack of batch invariance: a request's output can change depending on how many other requests the server happens to batch with it. Their batch-invariant kernels make reproducible LLM inference possible.

technology

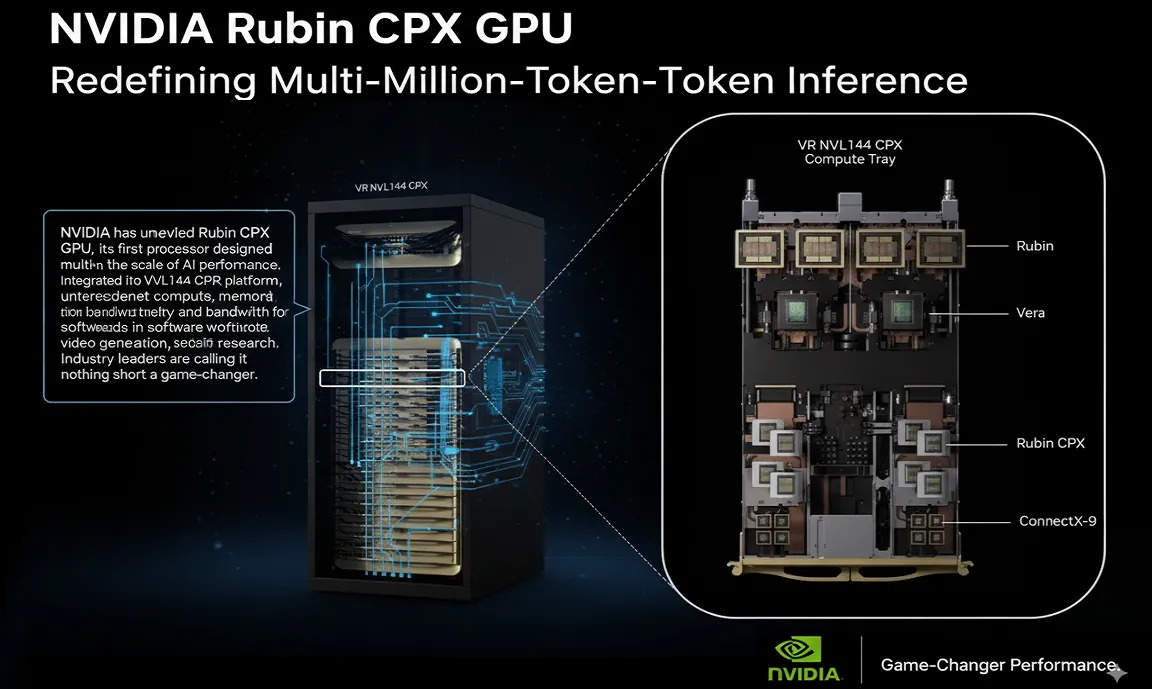

NVIDIA Unveils Next-Gen Rubin CPX GPU: Multi-Million Token Inference, A True Beast

NVIDIA has unveiled the Rubin CPX GPU, its first processor designed for multi-million-token inference, redefining the scale of AI performance. Integrated into the Vera Rubin NVL144 CPX platform, it delivers unprecedented compute, memory, and bandwidth for long-context workloads in software development...

nvidia

Billions to Use Free AGI! OpenAI’s Altman Warns of Global Deflation and Gigawatt Power Demands Despite DeepSeek

OpenAI CEO Sam Altman envisions a future where billions of people use free AGI, triggering extreme global deflation as efficiency and surplus wealth reshape the economy. From personal AI assistants to enterprise superteams, and from fusion-powered energy to gigawatt-scale demand, Altman argues that AGI will...

agi

Hinton’s Prediction Falls Short? AI Skills Now Drive a 23% Pay Premium, Outpacing a Master’s Degree

While some warn that AI will widen inequality, fresh data tells a different story for workers with AI skills: AI expertise now delivers salary premiums of up to 23%, far outpacing the return on a master’s degree. From finance to marketing, AI fluency is becoming the most valuable currency in the workplace.

other