GPT-5 Pro has once again earned the seal of approval from AI heavyweights.

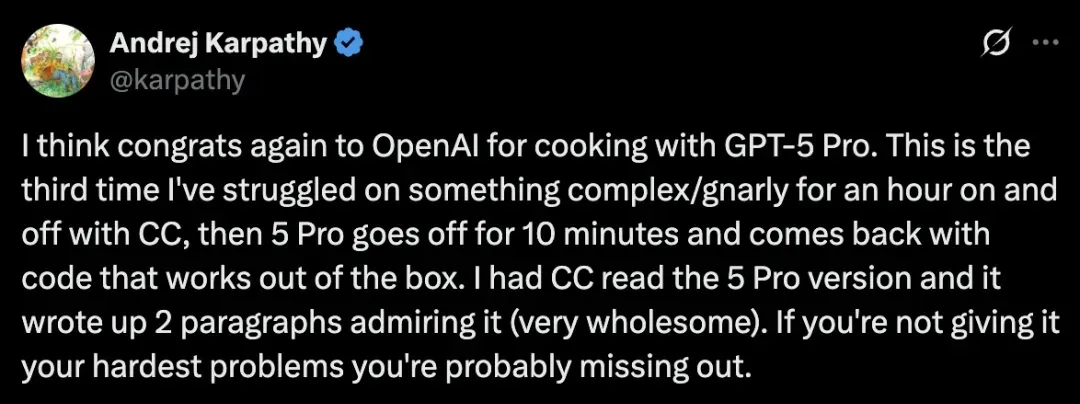

This morning, Andrej Karpathy couldn’t hold back his excitement:

“I have to hype OpenAI’s GPT-5 Pro again — it’s just insanely good!”

What exactly happened?

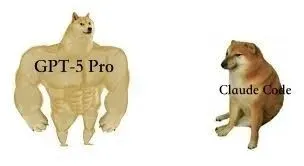

Claude Struggles, GPT-5 Pro Delivers

In his workflow, Karpathy hit a particularly thorny coding challenge. He spent over an hour wrestling with Claude Code — no luck.

Switching gears, he tried GPT-5 Pro. In just ten minutes, it generated a clean, ready-to-use solution.

Even more amusing, when Karpathy fed GPT-5 Pro’s output back to Claude, Claude responded with two full paragraphs of glowing praise for the solution.

Karpathy’s conclusion was simple:

“If you’re not handing your hardest problems to GPT-5 Pro, you’re missing out.”

In this coding showdown, GPT-5 Pro scored big.

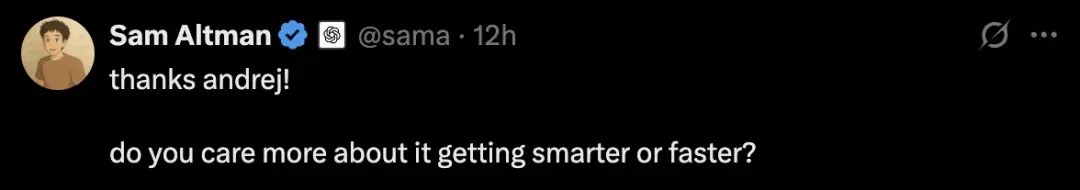

Sam Altman quickly jumped into the thread with thanks — and a curious question:

“Would you rather it become smarter, or faster?”

Meanwhile, OpenAI President Greg Brockman seized the moment to promote the model:

“GPT-5 Pro is the next-generation product for coding.”

The Coding Arena: GPT-5 Pro Levels Up

In the coding world, every developer has their favorite model — Claude, Gemini, GPT-5/Codex, or Grok Code.

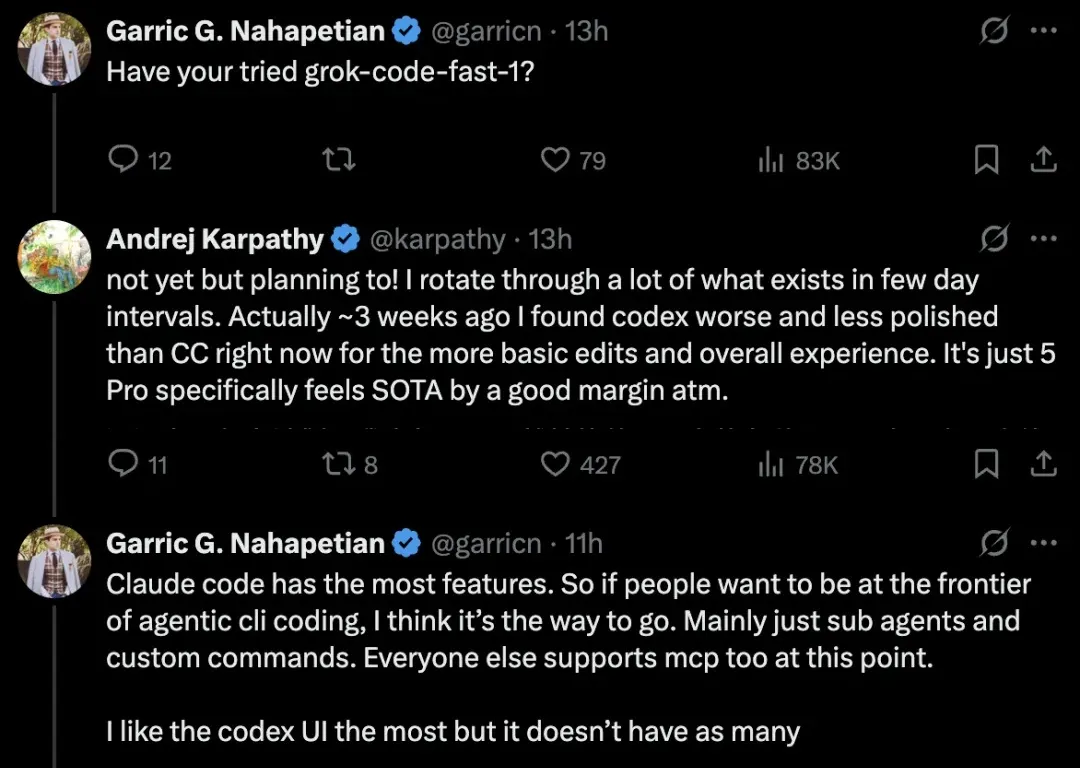

One commenter asked Karpathy if he had tried grok-code-fast-1.

Not yet, but it’s on his list. He explained that he rotates through various coding tools every few days.

Reflecting on his journey with OpenAI models, Karpathy admitted:

“About three weeks ago, I still felt Codex was behind Claude Code in baseline editing and overall experience.

But GPT-5 Pro? It’s now leagues ahead of the competition.”

Another commenter noted:

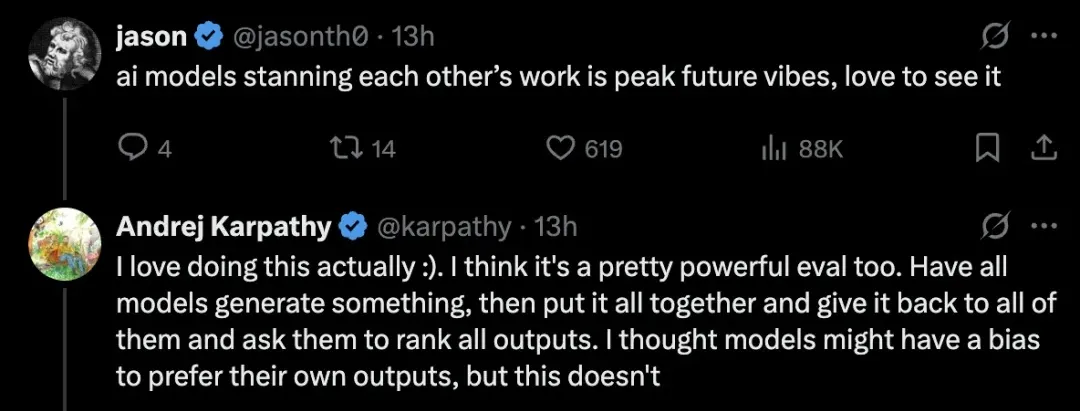

“Watching AI models admire each other’s work feels like a true glimpse of the future.”

Karpathy agreed. He often cross-evaluates models — generating the same content across multiple systems, then asking them to rank all outputs, including their own.

Surprisingly, a model doesn’t always favor its own output. Instead, it often provides fair, accurate evaluations.

For Karpathy, this is a living demo of the “generation–discrimination gap”:

- Writing excellent content is hard.

- Recognizing excellence is far easier.

- And today’s models are getting good at it.
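The cross-evaluation routine described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Karpathy's actual setup: the `Generator` and `Judge` interfaces, the `cross_evaluate` function, the Borda-style scoring, and the toy stand-in models are all hypothetical, and a real version would replace the stand-ins with calls to actual model APIs.

```python
from typing import Callable, Dict, List

# Hypothetical interfaces (assumptions, not from any real SDK):
# a generator maps a prompt to an answer; a judge receives the prompt plus
# all candidate answers and returns candidate indices ordered best to worst.
Generator = Callable[[str], str]
Judge = Callable[[str, List[str]], List[int]]


def cross_evaluate(
    prompt: str,
    generators: Dict[str, Generator],
    judges: Dict[str, Judge],
) -> Dict[str, int]:
    """Have every model answer the prompt, then have every judge rank all answers."""
    names = list(generators)
    answers = [generators[name](prompt) for name in names]
    scores = {name: 0 for name in names}

    for judge in judges.values():
        # Judges see only the answer texts, not who wrote them.
        ranking = judge(prompt, answers)
        # Borda-style scoring: best of n gets n - 1 points, worst gets 0.
        for position, candidate_index in enumerate(ranking):
            scores[names[candidate_index]] += len(names) - 1 - position
    return scores


# Toy stand-ins so the sketch runs without any network access.
def model_a(prompt: str) -> str:
    return "short answer"


def model_b(prompt: str) -> str:
    return "a longer, more detailed answer"


def length_judge(prompt: str, candidates: List[str]) -> List[int]:
    # Toy criterion: longer answers rank higher.
    return sorted(range(len(candidates)), key=lambda i: -len(candidates[i]))


result = cross_evaluate(
    "Explain the bug in this function.",
    generators={"model_a": model_a, "model_b": model_b},
    judges={"model_a": length_judge, "model_b": length_judge},
)
print(result)
```

In this toy run, `model_a` acting as a judge ranks `model_b`'s answer above its own, which mirrors the observation that a model does not necessarily favor its own output. Passing the answers without author names is a design choice to reduce the chance of self-preference.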

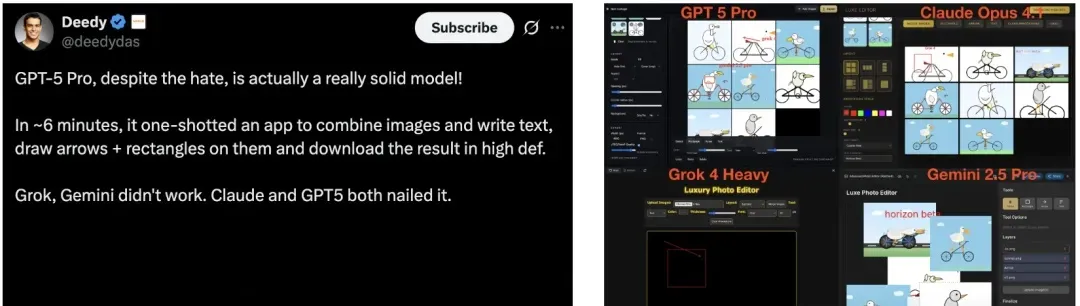

Developers Weigh In: GPT-5 Pro Impresses Beyond Karpathy

Karpathy isn’t alone in praising GPT-5 Pro.

One developer reported creating a full application in just six minutes — combining images, text, arrows, and more.

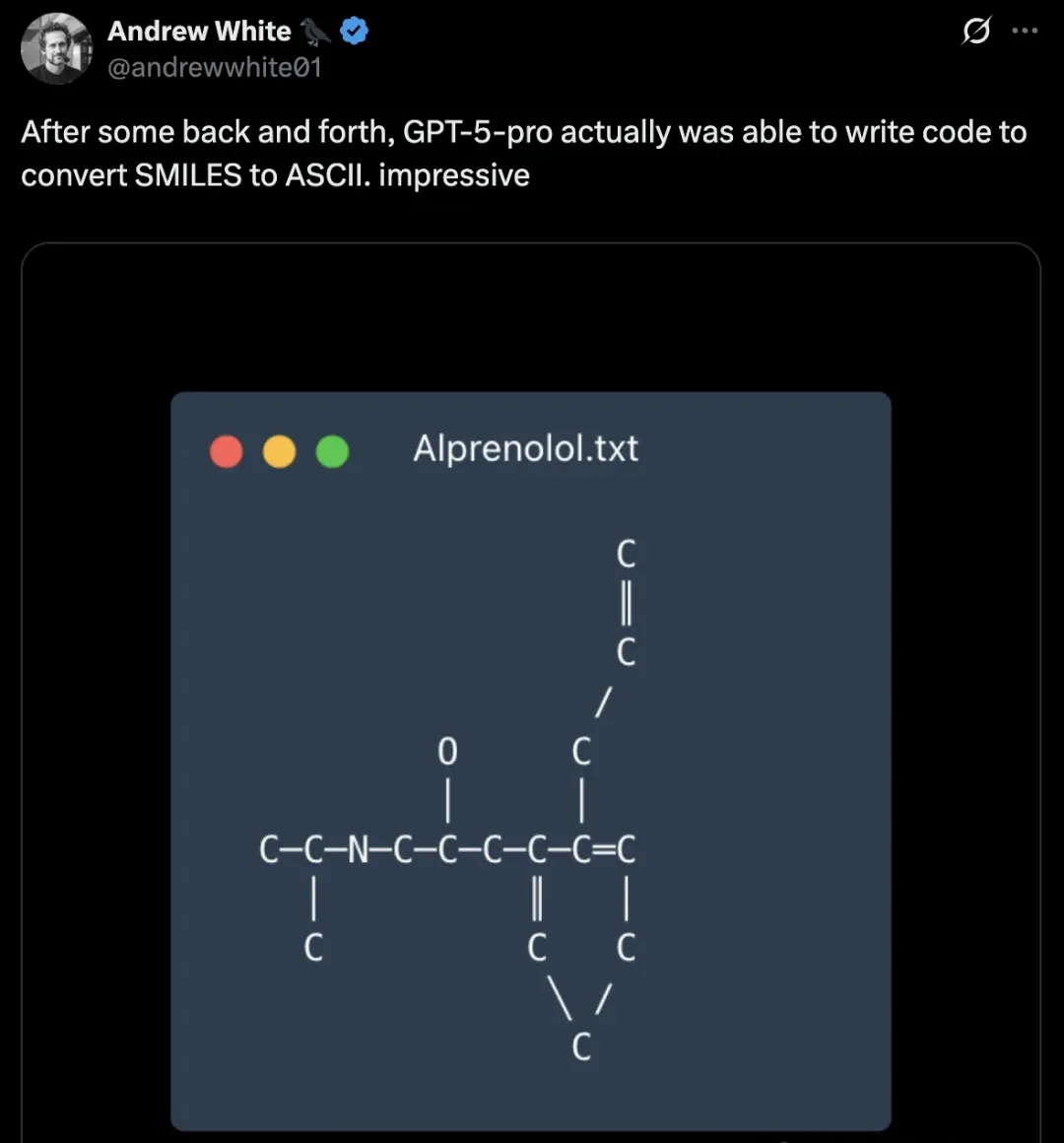

In a multi-turn conversation, GPT-5 Pro also converted SMILES strings, a line notation for chemical structures, into ASCII representations with ease.

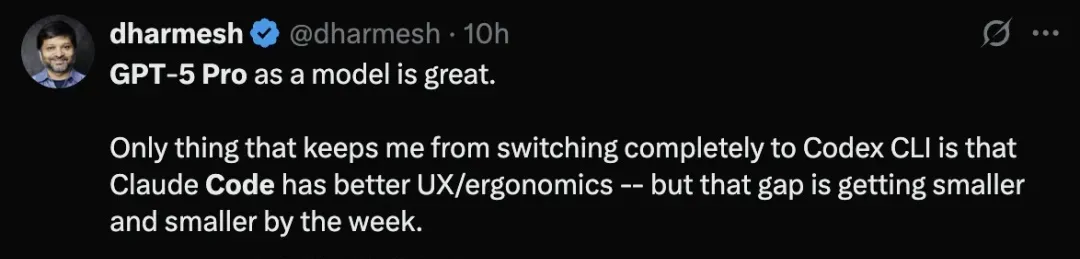

Even HubSpot’s co-founder acknowledged GPT-5 Pro’s raw power. Though he noted that Claude still feels slightly more polished in UX, the gap is closing fast.

Codex Usage Explodes — 10x Growth in Two Weeks

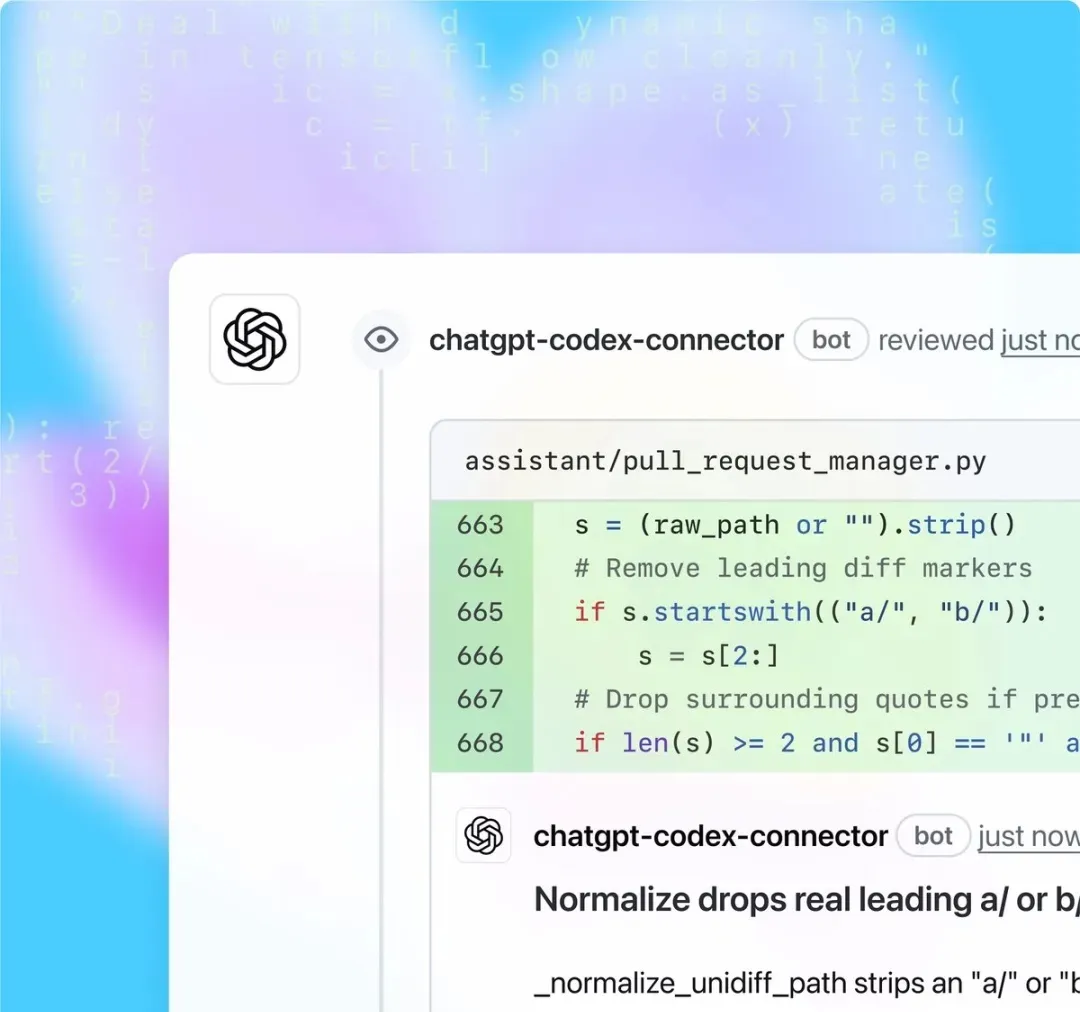

Back in May, OpenAI launched Codex, its AI programming assistant tailored for software engineering.

Initially, Codex ran on codex-1 (built on o3).

But once GPT-5 was integrated, performance jumped, quickly attracting developers worldwide.

Just two days ago, Altman revealed that Codex usage has surged 10x in the past two weeks.

He added that internally, a highly efficient model now drives much of that scale.

In productivity benchmarks, Codex already outpaces Devin, GitHub Copilot, and Cursor Agent.

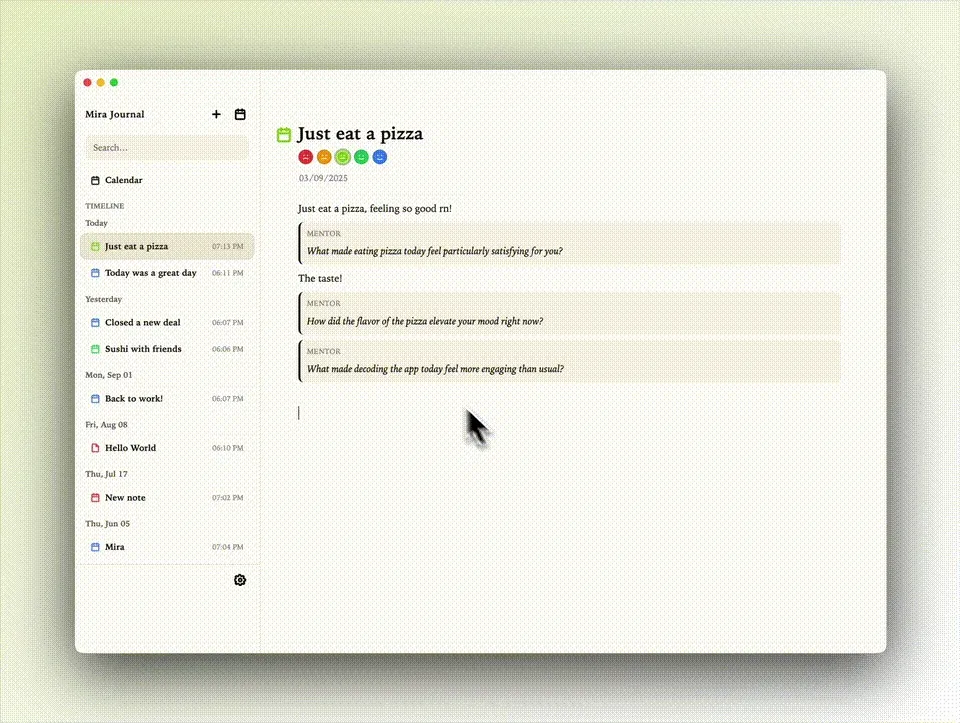

Imagine building a complete application without writing a single line of code.

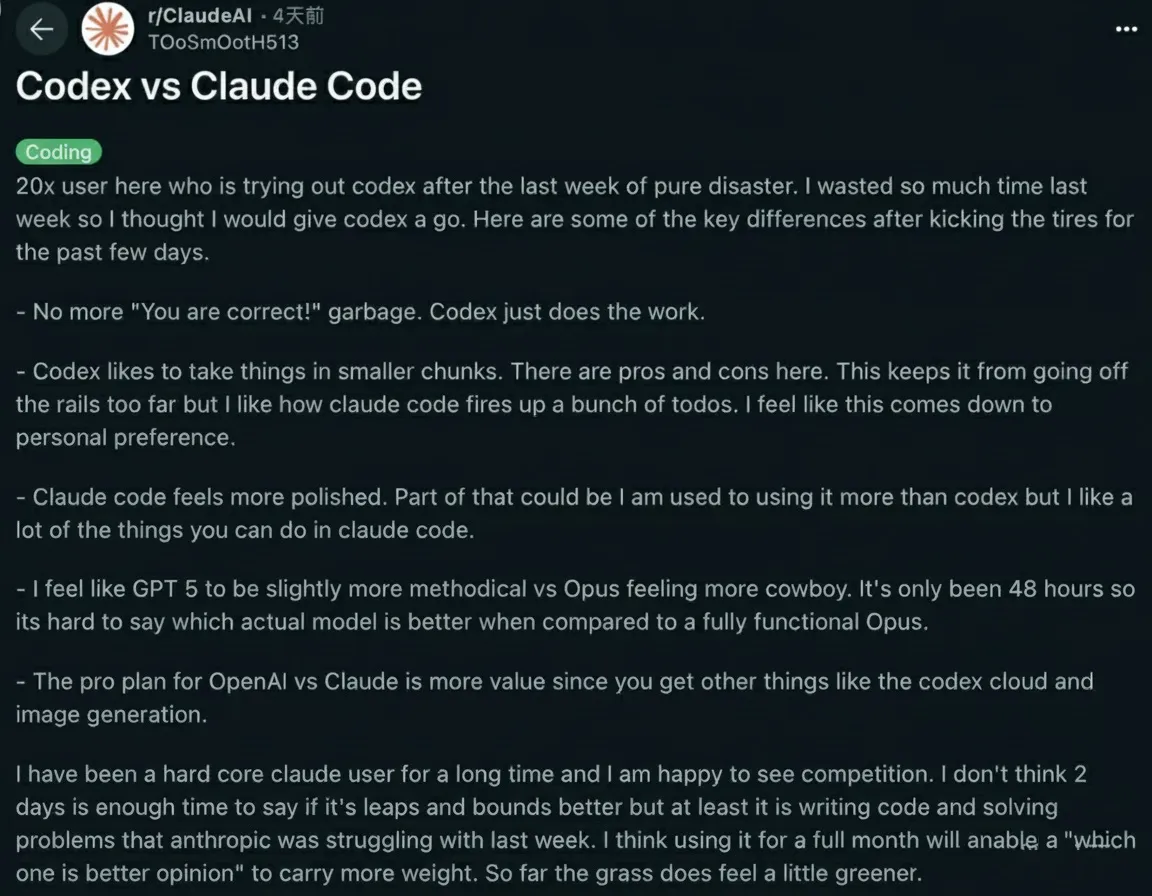

One veteran Claude Code developer, after a 48-hour deep dive with Codex, shared a side-by-side comparison.

His verdict: Codex shines particularly when Claude stalls on tricky problems, and it consistently delivers strong coding results.

Final Thoughts

From Karpathy’s glowing endorsement to developers’ hands-on tests, GPT-5 Pro and Codex are rapidly redefining the coding experience.

With OpenAI’s ecosystem evolving at breakneck speed, the real question is no longer whether to adopt these tools — but how fast you can bring them into your workflow.

Which model will you bet on for your next big project?