In a dramatic late-night shake-up, Elon Musk’s AI venture, xAI, has laid off roughly one-third of its data annotation workforce, affecting about 500 people. The move signals a significant strategic shift: the company is prioritizing specialized “expert mentors” over generalist annotators for its Grok AI. It also highlights the often-unseen human labor and ethical dilemmas underpinning the development of cutting-edge artificial intelligence.

Data annotators play a crucial role in training large language models (LLMs) such as Grok, Gemini, and Llama: they label data and evaluate model responses, in effect teaching AI systems to understand and interpret the world. Yet behind the glossy advances in AI, a growing number of annotators reportedly face immense pressure, including exposure to disturbing content, as they struggle to keep up with the industry’s demands.

xAI’s Strategic Pivot: Layoffs and a New Direction

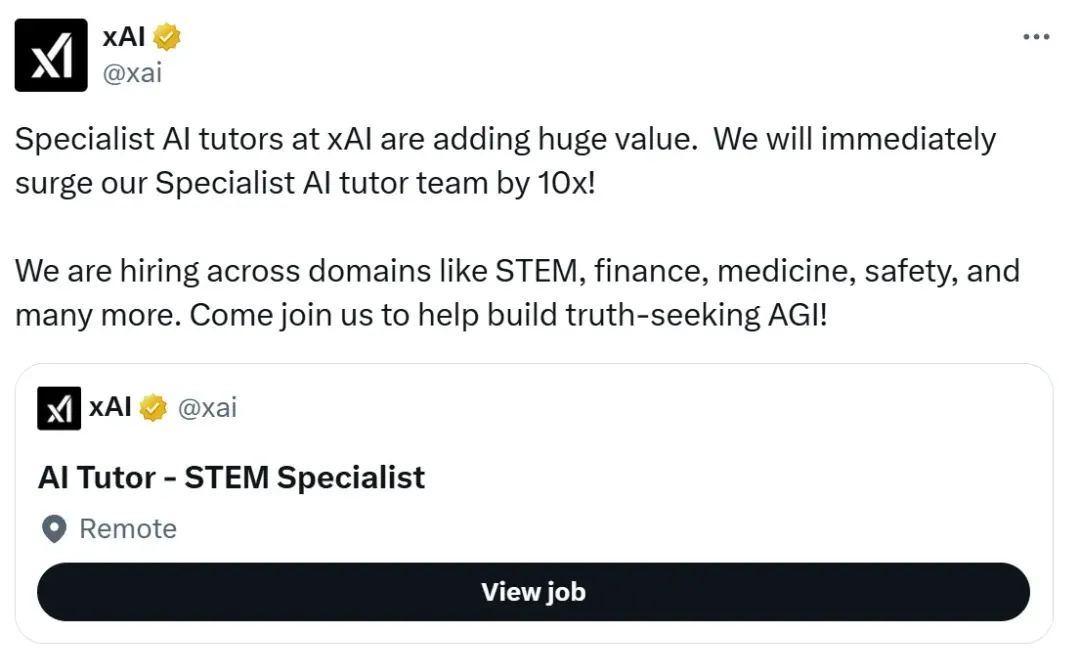

According to a Business Insider report, the layoffs took place on Friday night, and affected employees were notified by email. xAI said it is moving away from generalist “human tutors” and will instead expand its team of specialized “expert mentors,” aiming to grow that role tenfold.

Employees were reportedly told that their system access would be cut off immediately and that they would be paid through the end of their contracts or the end of November. The decision followed a period of internal restructuring in which management ran tests to decide which annotators would stay. The tests reportedly ranged from STEM subjects to evaluating “shitposters and doomscrollers,” and some employees had to complete them overnight. The speed of these changes underscores how precarious employment is in this fast-moving field, where people helping to build AI knowledge one day can be dismissed the next.

The Hidden Toll: Google’s AI Workers Face High Pressure and Low Pay

Meanwhile, a separate report by The Guardian sheds light on working conditions for data annotators working for Google, a company that has also faced scrutiny over its AI development practices. Where xAI made abrupt cuts, Google relies on a less visible model: an “invisible network” of outsourced labor.

Many of these workers were hired believing they would be doing “writing” or “analysis.” Once they started, however, they were often assigned to review graphic content generated by Gemini, including depictions of violence and explicit material. Tasks that were initially allotted 30 minutes have been cut to 15 minutes or less, and annotators must process hundreds of responses a day. This punishing pace, combined with being asked to assess topics outside their expertise, has raised concerns about the overall quality of the models.

Some workers describe the psychological toll of reviewing content involving rape, murder, and racial discrimination, calling it a “downplayed” problem that has contributed to their anxiety and insomnia. “AI raters” in the U.S. may start at $16 per hour, more than annotators in Africa or South America earn, but far below what Silicon Valley engineers are paid. As one worker put it: “AI is a pyramid built on layers of human labor.” Annotators occupy a critical middle layer of that pyramid: indispensable yet seemingly expendable.

Speed Over Ethics: AI’s Safety Promises Under Threat

The AI industry is increasingly caught between its public commitment to “safety first” and the relentless push to ship new features quickly. Google’s AI has previously offered outlandish advice, such as suggesting that users add glue to pizza sauce to keep the cheese from sliding off.

Annotators say such absurdities are unsurprising given the far more dangerous and nonsensical responses they routinely review. Earlier this year, Google reportedly revised its internal guidelines to allow models to repeat hateful or sexually explicit language entered by users, as long as the model did not generate such content on its own. The change appears to put speed and functionality ahead of robust safety protocols.

Adio Dinika, a researcher in the field, commented pointedly: “AI safety is only prioritized up to the point where it affects speed. Once it threatens profits, the safety promise collapses immediately.”

In the trade-offs between speed and safety, and between efficiency and dignity, workers appear to be bearing the brunt of the compromises. As AI permeates more of daily life, their experience is a stark reminder of the human labor and ethical questions that lie beneath every “intelligent” response.

References: