How do you really know whether an AI model or developer tool has improved?

The simplest test is also the hardest: use it for a full day of real work.

Recently, Anthropic closed a $13 billion funding round, pushing its valuation to $183 billion. It’s the second-largest raise in AI this year, behind only OpenAI’s record-shattering $40 billion round in March.

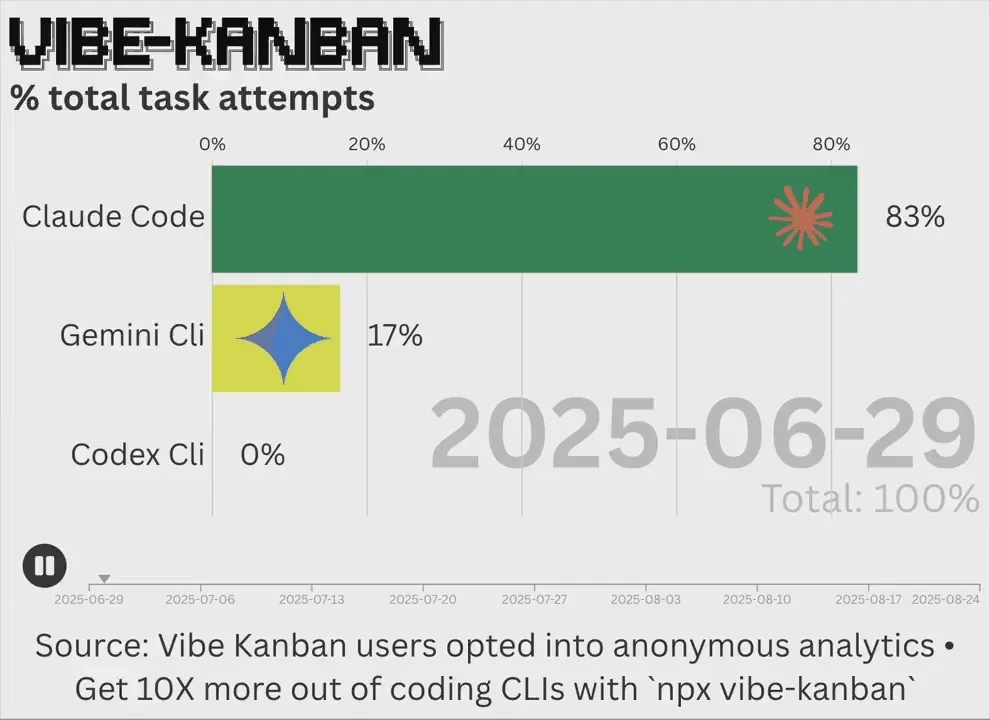

But hype brings scrutiny. Some users complain that Anthropic’s flagship product, Claude Code, occasionally “dumbs down.” Others have started experimenting with Codex CLI, OpenAI’s rival product.

Yet, despite the noise, Claude Code has proven itself a breakout hit. In just four months post-launch, it attracted over 115,000 users, pulling many away from Cursor.

What’s driving this traction? In a recent interview, Boris Cherny, Claude Code’s product lead, pulled back the curtain. His team’s philosophy is refreshingly pragmatic:

- Radical simplicity in design

- Deep extensibility as a core value

- Real-world use over artificial benchmarks

- A culture of instant feedback loops and fixes

Coding, One Year Ago vs. Now

A year ago, coding workflows were piecemeal: an IDE with autocomplete, some copy-paste from a chatbot, and lots of manual glue.

Today, AI agents are fully embedded in daily development. They don’t just suggest a line or two—they can handle massive code rewrites, run autonomously, and sometimes build entire apps end-to-end.

It’s less about micromanaging edits and more about delegating intent: tell the agent what you want, then trust it to execute.

Why Did This Shift Happen?

Two bottlenecks used to hold this back:

- Model quality – early LLMs couldn’t sustain autonomy.

- Scaffolding – the frameworks wrapped around models weren’t mature.

Over the past year, both changed fast. Model upgrades such as Claude 3.7 Sonnet, Claude Sonnet 4, and Claude Opus 4.1 made long autonomous runs far more reliable. Meanwhile, Claude Code became the harness for the horse: the scaffolding that ties together prompts, context management, tool calls, MCP (Model Context Protocol) connectivity, and permissions.

Models and tools have since been co-evolving, accelerating the shift toward agent-native development.
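To make the “harness” idea concrete, here is a minimal, generic sketch of an agent loop in Python. It is not Claude Code’s implementation: the `Harness`, `ToolCall`, and `Final` names, the scripted stand-in model, and the single `read_file` tool are all hypothetical. It shows three of the pieces listed above: accumulated context, tool calls, and a permission gate that reports denied calls back to the model instead of executing them.

```python
from dataclasses import dataclass, field
from typing import Callable, Union


@dataclass
class ToolCall:
    """Hypothetical model output: a request to run a named tool."""
    name: str
    arg: str


@dataclass
class Final:
    """Hypothetical model output: the finished answer."""
    text: str


@dataclass
class Harness:
    model: Callable[[list], Union[ToolCall, Final]]  # stand-in for an LLM call
    tools: dict                                       # tool name -> function(arg) -> result
    allowed: set                                      # permission gate: pre-approved tool names
    max_steps: int = 10                               # hard stop for runaway loops
    context: list = field(default_factory=list)       # running transcript fed to the model

    def run(self, task: str) -> str:
        self.context.append(f"user: {task}")
        for _ in range(self.max_steps):
            step = self.model(self.context)
            if isinstance(step, Final):
                return step.text
            if step.name not in self.allowed:
                # Denied calls are reported back so the model can adapt.
                self.context.append(f"denied: {step.name}")
                continue
            result = self.tools[step.name](step.arg)
            self.context.append(f"tool {step.name}: {result}")
        return "stopped: step limit reached"


def scripted_model(context: list) -> Union[ToolCall, Final]:
    """Hypothetical stand-in model: read a file once, then answer."""
    if not any(line.startswith("tool read_file") for line in context):
        return ToolCall("read_file", "README.md")
    return Final("Summarized README.md")


if __name__ == "__main__":
    harness = Harness(
        model=scripted_model,
        tools={"read_file": lambda path: f"<contents of {path}>"},
        allowed={"read_file"},
    )
    print(harness.run("Summarize the README"))  # Summarized README.md
    print(harness.context)
```

The step limit is one simple way for the harness, rather than the model, to guarantee that an autonomous run eventually stops. A production harness would also manage context size and connect to external tools (for example over MCP), which this sketch leaves out.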

Dogfooding at Anthropic: Everyone Uses Claude Code

Inside Anthropic, Claude Code isn’t optional—it’s mandatory. Even the researchers building the models rely on it for their daily work.

That kind of dogfooding means weaknesses are exposed quickly. Early on, Claude 3.5 Sonnet could run autonomously for only a minute before derailing. Today, newer models run much longer and need far fewer interventions.

This creates a tight loop between human experience and model improvement—bugs and friction points get baked back into training, closing the gap between “research lab” and “real world.”

Forget Benchmarks. The Real Test Is Work.

How do you evaluate a new model or feature? Cherny’s answer:

“Do your actual job with it.”

Daily coding, debugging, reading GitHub issues, responding to Slack—these workflows create a far more honest signal than any benchmark can.

Benchmarks are rigid. Work is messy. And that mess is exactly what reveals whether a tool is truly getting better.

Feedback at Firehose Speed

One of Anthropic’s secret weapons is how quickly the team closes the feedback loop.

“Sometimes I’ll spend hours just crushing bug reports as quickly as possible—then immediately tell users their issue is fixed. That keeps the feedback firehose flowing.”

That culture of immediacy has turned Claude Code’s internal feedback channel into something like a constant, high-pressure stream. It’s chaotic—but it fuels rapid iteration.

Where Claude Code Is Today: Extensibility as Philosophy

Claude Code has always aimed for two things: extreme simplicity and limitless extensibility.

Its evolution looks like this:

- Then: A humble `CLAUDE.md` file to add project context

- Now: Rich systems, including permissions, hooks, MCP integration, slash commands, and sub-agents

And because the models themselves have improved (longer runs, sharper memory, more autonomy), these extensibility hooks have become dramatically more powerful.
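Hooks are one of the extension points listed above. As a sketch, assuming the hook contract described in Anthropic’s Claude Code hooks documentation (the hook receives a JSON event on stdin containing `tool_name` and `tool_input`, and exit code 2 blocks the tool call and feeds stderr back to Claude), a `PreToolUse` hook that refuses obviously destructive shell commands might look like this. The deny-list patterns and the `find_blocked_pattern` helper are hypothetical illustrations; check the current documentation for the exact event schema and for how to register the script in your settings.

```python
import json
import re
import sys
from typing import Optional

# Hypothetical deny-list; tune it for your own project.
BLOCKED_PATTERNS = [
    r"\brm\s+-rf\s+/",                      # recursive delete of an absolute path
    r"\bgit\s+push\s+(-f|--force)(\s|$)",   # force-push (but not --force-with-lease)
]


def find_blocked_pattern(command: str) -> Optional[str]:
    """Return the first deny-list pattern the command matches, or None."""
    for pattern in BLOCKED_PATTERNS:
        if re.search(pattern, command):
            return pattern
    return None


def main() -> int:
    # Assumed contract: the hook event arrives as JSON on stdin.
    event = json.load(sys.stdin)
    if event.get("tool_name") != "Bash":
        return 0  # only shell commands are checked
    command = event.get("tool_input", {}).get("command", "")
    pattern = find_blocked_pattern(command)
    if pattern is not None:
        # Assumed contract: exit code 2 blocks the call; stderr is shown to Claude.
        print(f"Blocked: command matches {pattern!r}", file=sys.stderr)
        return 2
    return 0


if __name__ == "__main__":
    sys.exit(main())
```

Keeping the policy in a small pure function makes it easy to unit-test separately from the stdin/exit-code plumbing.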

The Road Ahead: 6–12 Months of Fusion Workflows

The next era of Claude Code will blur the line between manual coding and agent autonomy.

- Interactive programming: You don’t edit text directly; you instruct Claude to make changes.

- Proactive programming: Claude initiates tasks, reviews itself, and you decide whether to accept.

- Goal-driven programming: Within 12–24 months, Claude will focus less on line-by-line execution and more on long-term objectives—like an engineer working toward monthly milestones.

This shift reframes the developer’s role from coder to strategist.

Advice for Developers in the Agent Era

Before AI agents, the JavaScript stack alone was intimidating: React, Next.js, multiple build and deploy systems.

Now, the entry barrier has collapsed. With agents, you can start building from an idea—not a stack.

Cherny’s advice:

- Still master the fundamentals: programming languages, runtimes, compilers, systems design.

- But lean into creativity: in an age where code can be endlessly rewritten, ideas matter more than syntax.

Tips for New Claude Code Users

- Ask questions before writing code

  Instead of jumping straight into generation, use Claude as an explorer:

  - “How would I add logging here?”

  - “Why was this function designed like this?”

- Match strategy to task complexity

  - Simple tasks: Just @-mention Claude in a GitHub issue and let it generate a PR.

  - Medium tasks: Collaborate in plan mode, then hand execution to Claude.

  - Hard tasks: Stay in the driver’s seat. Use Claude for research, prototyping, and boundary testing, but own the core implementation.

The key: adapt Claude’s role to the problem—don’t use one-size-fits-all workflows.